The Great Consolidation: An Inflection Point

Every era of technological innovation begins and ends the same way. At the start, there’s a rush of ideas and a flood of startups launching products, platforms, and services, each battling to stake a claim in the tech stack. But what begins as fragmented and abundant opportunity inevitably collapses into concentrated power. A handful of giants seize the choke points, fortify new moats, absorb the best, and leave the rest to vanish.

Today’s tech market is setting the stage for the Great Consolidation. Competitive pressures are intensifying on multiple fronts due to tighter capital, rising infrastructure costs, a slowing global economy, and an unprecedented build-out of AI infrastructure. Incumbents are responding by integrating and embedding generative AI models into their clouds, operating systems, browsers, and apps to reinforce the choke points they already control. Meanwhile, challengers are pioneering AI-powered Integrated Development Environments (IDEs) that enable AI agents to manage the entire software development lifecycle, from coding to deployment and monitoring. This effectively turns software development into a seamless service. The race to achieve full-stack platform scope and scale distribution will ultimately determine who dominates the next era of the digital ecosystem.

And these dynamics aren’t happening in a vacuum. Stepping back, the bigger picture reveals mounting pressure on the U.S. economy. The United States is burdened with record national debt ($37T+), a cooling labor market with slower hiring and creeping unemployment, elevated interest rates weighing on growth and capital formation, and a dollar whose dominance is eroding under pressure. Meanwhile, the U.S. stock market has never been more dependent on technology, with roughly 34% of the S&P 500’s value concentrated in the Magnificent Seven. And that concentration is no accident. The United States is not only the global leader in finance and technology, but also the issuer of the world’s reserve currency and the home of the digital infrastructure giants that define the modern AI economy.

With similar, and in many cases worse, economic conditions across the globe, the critical question becomes: where will future growth come from to fuel stability and prosperity?

Global stability and prosperity depend on a single outcome: the United States technology sector must win, and win big.

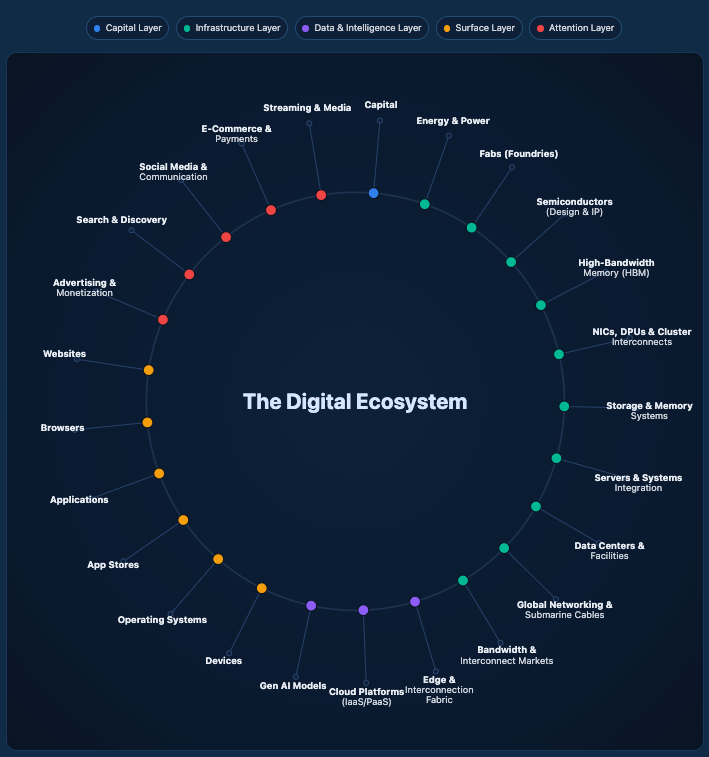

And if U.S. technology must win, then the AI economy is its growth engine. The race is no longer just about platforms, attention, and distribution; it’s about who controls the choke points of the value chain, from semiconductor fabs and high-bandwidth memory, to GPUs and AI chips, to cloud infrastructure and data centers, and ultimately to the operating systems that connect billions of people across the globe. And if generative artificial intelligence is the global growth engine, then innovation and consolidation are the levers that will reshape the digital economy.

Digital Ecosystem: Critical Choke Points

The digital ecosystem is defined today by five critical choke points that reveal how power is consolidating into the hands of a few giants: semiconductor fabs, GPUs and AI chips, high-bandwidth memory, cloud infrastructure, and operating systems. The winners won’t just shape the future of technology; they will set the course of the global economy. Artificial intelligence isn’t just accelerating this shift; it’s driving it.

Choke Point #1: Semiconductor Fabs

The ultimate choke point is not the cloud or the operating system; it’s the fabs. These semiconductor factories transform silicon wafers into working chips, the physical foundation of all compute. Each advanced fab requires $20-$30 billion in capital and years to bring online, and even then, the expertise and supply chains cannot be easily replicated. Today, nearly all advanced capacity is concentrated in just a handful of companies and countries, led by TSMC (~71%) in Taiwan, Samsung (~8%) in South Korea, and Intel in the United States. That geographic concentration makes fabs not just a technological bottleneck, but a geopolitical fault line in the global economy.

That fragility has triggered a race to rebalance supply toward the U.S., with the CHIPS Act as the market driver: Intel’s $60B buildout in Arizona and Ohio, TSMC’s $40B Arizona fabs, and Samsung’s $17B Texas project. Together these investments signal America’s attempt to secure the first choke point, the physical foundation of compute itself. And yet, even with these historic investments, most new capacity won’t come online until 2027 or later, leaving the fab bottleneck as the defining constraint on AI production and the digital economy for years to come.

Choke Point #2: GPUs, AI Hardware & Software

But fabs are only the beginning. Above them, GPUs and AI chips act as specialized accelerators that transform silicon into usable compute. Today, Nvidia dominates this layer, controlling roughly 80% of the global AI GPU market. But its power doesn’t stop at hardware. The CUDA software stack has become the default platform for AI development. Every major framework (PyTorch, TensorFlow, JAX) is optimized to run on it. Once models are built and trained on CUDA, moving to another vendor requires costly rewrites, re-optimizations, and efficiency losses. This combination of hardware dominance and software lock-in has made GPUs and AI chips the second-biggest choke point in the digital economy.

Capital is flooding into challengers. AMD’s MI300 accelerators are gaining traction in high-performance computing, Intel’s Gaudi line continues to push for relevance, and hyperscalers are designing their own silicon: Google TPU, AWS Trainium, and Microsoft Maia. Startups like Cerebras ($1.1B Series G, finalized Sept 30, 2025), Groq ($750M Series E, Sept 2025), and Tenstorrent ($693M Series D, Dec 2024) have raised large rounds to break Nvidia’s grip. Yet despite this wave of investment, no competitor has cracked the combination of scale, ecosystem, and software entrenchment that keeps Nvidia unavoidable. The U.S. government knows it, which is part of why export controls on advanced GPUs are among the most sensitive levers in global tech policy today.

Choke Point #3: High-Bandwidth Memory (HBM)

If GPUs are the engines of AI, then high-bandwidth memory is the fuel line. Without it, accelerators can’t train or operate AI models. The market is concentrated among just three companies: SK Hynix (~62%), Micron (~21%), and Samsung (~17%). With 100% of global supply controlled by this oligopoly and production perpetually constrained, HBM has become a choke point in its own right, one whose scarcity directly throttles the pace of GPU and AI chip production worldwide.
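One way to put a number on that concentration is the Herfindahl-Hirschman Index (HHI), the sum of squared market shares. The sketch below is illustrative only: it assumes the approximate shares quoted above and uses a hypothetical helper named `hhi`. For reference, the 2023 U.S. merger guidelines treat markets above 1,800 as highly concentrated.

```python
def hhi(shares_pct):
    """Herfindahl-Hirschman Index: sum of squared market shares, in percent."""
    return sum(share ** 2 for share in shares_pct.values())


# Approximate HBM shares as quoted in this article (assumed, not exact).
hbm_shares = {"SK Hynix": 62, "Micron": 21, "Samsung": 17}

hbm_hhi = hhi(hbm_shares)
print(hbm_hhi)  # 4574
```

On these figures the HBM market scores well above the 1,800 threshold, and a pure monopoly would score 10,000.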

As demand surges alongside GPU shipments, supply remains chronically tight and often sold out months in advance. Micron, the lone U.S. HBM supplier, has already sold out most of its 2026 capacity and expects to be fully booked before the end of this year. That scarcity is why private capital is betting heavily on the future of AI memory. Micron itself is investing $100 billion in a New York mega-site dedicated to advanced memory production, a project central not only to its growth but also to U.S. leadership in the AI supply chain.

Choke Point #4: Cloud Infrastructure

If fabs, HBM, and GPUs provide the raw compute, cloud infrastructure determines how that compute is distributed, orchestrated, and scaled. Amazon AWS (~30%), Microsoft Azure (~20%), and Google Cloud (~13%) together control roughly 63% of the global market. These hyperscalers have become the backbone of the AI economy. They have done this by transforming advanced infrastructure into on-demand utilities, elevating the cloud from a simple service to the control layer for how people, businesses, and governments access and harness compute worldwide.

The sheer scale of hyperscaler spending underscores why the cloud has become its own choke point. In 2025, Amazon leads with an estimated $118B in CapEx, with the majority directed toward AWS data centers and GPU capacity. Google follows at ~$85B, focused on AI and cloud infrastructure. Microsoft is pacing toward ~$80B, with most of that aimed at AI-enabled data centers. The breadth of services, scale of infrastructure, depth of integration, and sheer capital intensity make cloud infrastructure one of the most entrenched choke points in the digital economy for the foreseeable future. This level of spending cements the distribution of compute, cloud infrastructure, and AI-as-a-Service in the hands of just three providers.
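For a sense of the combined scale, the figures can be added up directly. This is a back-of-the-envelope sketch that assumes the approximate 2025 CapEx estimates and cloud market shares quoted in this section; the variable names are illustrative.

```python
# Estimated 2025 CapEx in billions of USD, as quoted in this article (approximate).
capex_2025_busd = {"Amazon": 118, "Google": 85, "Microsoft": 80}

# Approximate global cloud market shares in percent, as quoted in this article.
cloud_share_pct = {"AWS": 30, "Azure": 20, "Google Cloud": 13}

total_capex = sum(capex_2025_busd.values())
combined_share = sum(cloud_share_pct.values())

print(f"Combined 2025 CapEx: ~${total_capex}B")        # ~$283B
print(f"Combined cloud share: ~{combined_share}%")     # ~63%
```

Taken at face value, the three providers are on pace to spend roughly $283B in a single year while holding about 63% of the global cloud market.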

Choke Point #5: Operating Systems

If the cloud is the backbone of the AI economy, operating systems are the access layer, the bridge between humans and devices. They dictate how billions of people and businesses access information, analytics, applications, browsers, content, commerce, and now generative AI. Google commands this layer through Android and Chrome, Microsoft through Windows, and Apple through iOS, macOS, and Safari at the premium end of devices. The browser era, once the dominant interface for searching, scrolling, shopping, and advertising, is ending.

As of late 2025, the shift to native AI integration has begun. Large Language Models (LLMs) are being embedded directly into the operating system, not as apps but as a core function. Microsoft integrated a preview of Copilot into Windows 11 in September 2023 and has continued to deepen this integration as a core system feature. Apple announced Apple Intelligence in June 2024, with initial beta integrations for iOS 18.1, iPadOS 18.1, and macOS Sequoia 15.1 in September 2024. Google has been rolling out Gemini integrations into Chrome and the Android system throughout 2024 and 2025, starting with contextual search and workspace tools, with deeper integration across its ecosystem ongoing as of late 2025.

Microsoft, Apple, and Alphabet are leveraging their choke point distribution layers to defend their moats, not just against each other but also against AI challengers like OpenAI, Anthropic, Replit, and Perplexity. These challengers lack direct control of the device and OS, the default surfaces of human-device interaction. As user behavior shifts toward OS-native AI experiences, reaching consumers through standalone apps or browsers eventually won’t be possible. That shift will force challengers to find new routes to scale distribution, such as deep enterprise partnerships with the cloud platform players or specialized hardware ecosystems where incumbents have yet to claim full control.

Generative AI Business Engine-as-a-Service

Alphabet Inc. and Microsoft now stand alone as the only companies in the world with true end-to-end vertical and horizontal control of compute distribution, spanning:

Core Technology Stack: Cloud infrastructure, devices, operating systems, browsers, app stores, apps, enterprise software, and in-house AI models.

Physical Infrastructure: Global data center networks, proprietary networking hardware, and extensive owned terrestrial and subsea fiber optic cable networks.

Semiconductor Design: In-house expertise in designing custom silicon, including CPUs, SoCs, and specialized AI accelerators (TPUs, Maia), allowing for co-optimization of hardware for software.

Ecosystem and Capital: The ability to leverage massive financial capital for multi-billion dollar infrastructure investments, as well as influence the broader industry through leadership in open-source standards, strategic partnerships, and AI accelerator programs.

That unparalleled positioning opens a clear path for them to deliver a conceptual Generative AI Business Engine-as-a-Service, a system capable of transforming natural language into operational capability at scale.

It would be available to anyone on Earth or in space with a device and an internet connection. Through conversational text or speech, individuals or businesses could develop new technology, or even entire businesses, on demand, and continue to run, optimize, and scale them seamlessly within a simple conversation.

By doing so, Alphabet Inc. and Microsoft would not just redefine access to technology, but also rewrite the very possibilities of innovation, entrepreneurship, and wealth creation.

The Great Consolidation isn’t coming . . . it’s here.

This was Edition #1 of Techonomics.

Stay tuned, we’re just getting started.

— Craig Sweeney-Draude

Founder, Techonomics